Memory is a crucial part of code optimisation. Go inherits many of its features from the C family of languages. We are accustomed to thinking that Go does not require low-level knowledge to write efficient programs. It does not work that way, and the struct type is good evidence.

A struct is a composite data type that groups related variables together. Structs often occupy many bytes of memory, which can have a significant impact on performance. Let’s recall how the CPU fetches data from memory.

Computer architecture

In computer architecture, two critical factors influencing a system’s performance are memory size and word length.

Memory size influences the volume of data readily accessible to the processor, impacting the system’s ability to manage large applications and datasets efficiently. A larger memory allows for more data and programs to reside in RAM simultaneously, reducing the need for slower disk access.

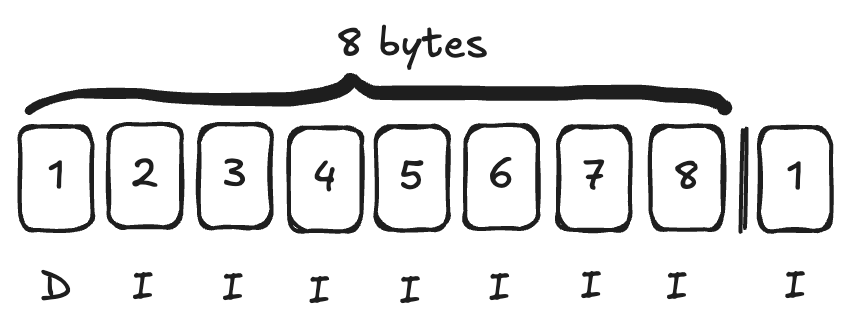

Word length determines the amount of data a processor can handle per operation, which affects computational speed and precision. Common word lengths include 8, 16, 32, or 64 bits. For instance, a 32-bit processor operates on 4 bytes at a time, whereas a 64-bit processor operates on 8 bytes at a time, so it can move twice as much data per memory access.
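To check the word length of the machine a Go program runs on, one option is to look at the size of a pointer-sized integer. The sketch below assumes that the size of `uintptr` matches the word length, which holds on the common 32-bit and 64-bit targets; `wordSizeBytes` is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"strconv"
	"unsafe"
)

// wordSizeBytes reports the size of a pointer-sized integer in bytes.
// Assumption: this matches the machine word length on the target platform.
func wordSizeBytes() int {
	return int(unsafe.Sizeof(uintptr(0)))
}

func main() {
	fmt.Printf("word size: %d bytes (%d bits)\n", wordSizeBytes(), wordSizeBytes()*8)
	// strconv.IntSize is the size of Go's int type in bits.
	fmt.Printf("int size:  %d bits\n", strconv.IntSize)
}
```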

Traditionally, a word was the smallest addressable unit of memory, and its size matched the machine’s general-purpose registers. Most modern architectures are byte-addressable, but the word remains the natural unit in which the CPU reads and writes memory.

Go struct

With the basics under our belt, let’s scrutinise a real example in Go. All the tests were performed on a 64-bit machine.

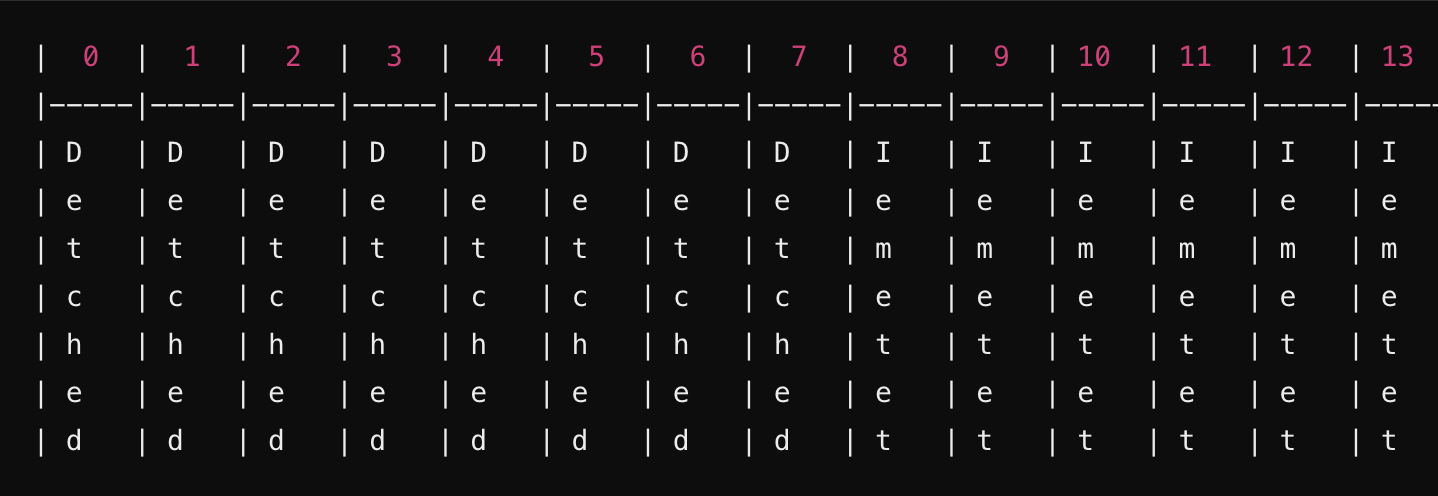

The following House struct is designed with an arbitrary field order.

```go
type House struct {
	Detached  bool    // 1 byte
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
}
```

Assuming we run the code on a 64-bit machine, the anticipated memory usage is 21 bytes.

```go
package main

import (
	"fmt"
	"unsafe"
)

type House struct {
	Detached  bool    // 1 byte
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
}

func main() {
	var h House
	// Naive expectation: the sum of the individual field sizes.
	var expectedSize = 1 + 8 + 4 + 4 + 4
	// unsafe.Sizeof reports the size the compiler actually reserves.
	fmt.Printf("Expected size of House %d, got %d\n", expectedSize, unsafe.Sizeof(h))
}
```

The result diverges from the expectations:

```
Expected size of House 21, got 32
```

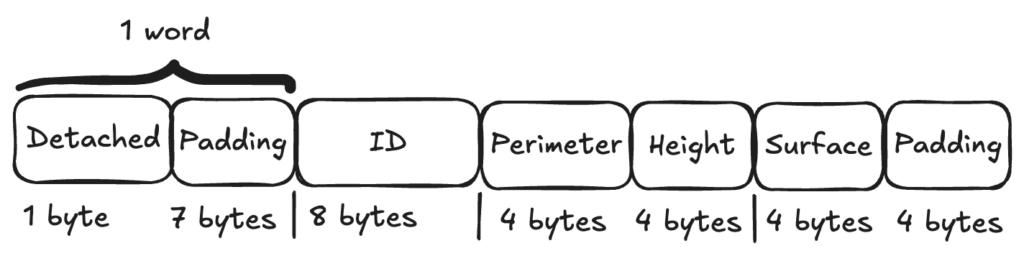

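The actual size is 32 bytes (4 × 8 bytes), not 21 bytes. The discrepancy arises from memory alignment.

The CPU reads data most efficiently when each value can be fetched in a single operation. Without alignment, the ID field would start right after Detached and span two 8-byte words, so reading it would take two memory accesses instead of one, as the following picture outlines: the first 8 contiguous bytes would be shared between the Detached field and most of the ID field. To avoid this, the compiler inserts 7 bytes of padding after Detached so that ID starts at offset 8, and 4 more bytes after Surface so that the total size is a multiple of 8.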

Memory optimisation

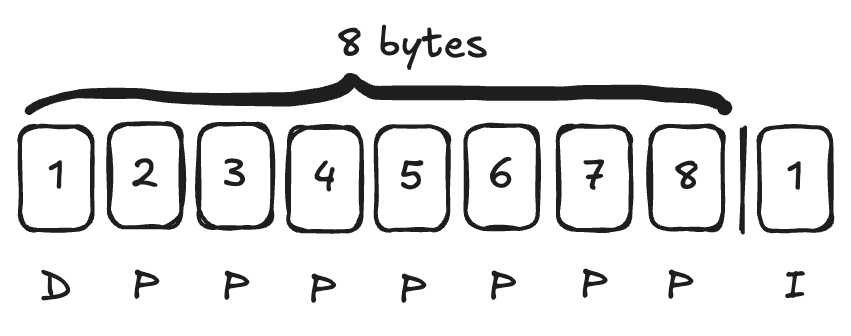

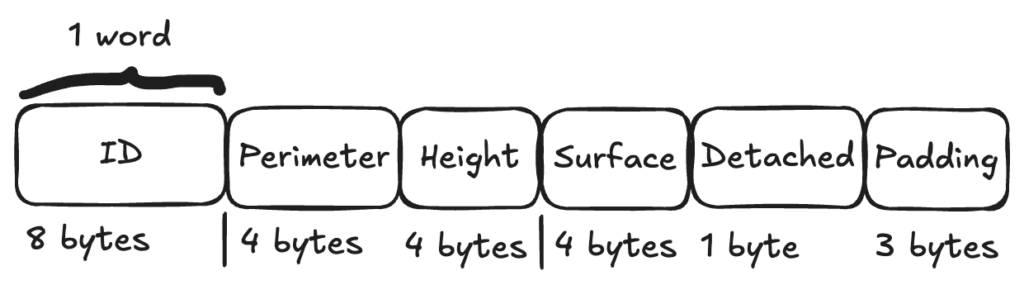
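The padding can be made visible with `unsafe.Offsetof`, which reports where each field starts inside the struct. This is a minimal sketch assuming a 64-bit platform; `houseOffsets` is a hypothetical helper introduced only for illustration.

```go
package main

import (
	"fmt"
	"unsafe"
)

type House struct {
	Detached  bool
	ID        int64
	Perimeter int32
	Height    int32
	Surface   float32
}

// houseOffsets returns the byte offset of every House field, in declaration order.
func houseOffsets() [5]uintptr {
	var h House
	return [5]uintptr{
		unsafe.Offsetof(h.Detached),
		unsafe.Offsetof(h.ID),
		unsafe.Offsetof(h.Perimeter),
		unsafe.Offsetof(h.Height),
		unsafe.Offsetof(h.Surface),
	}
}

func main() {
	var h House
	names := [5]string{"Detached", "ID", "Perimeter", "Height", "Surface"}
	for i, off := range houseOffsets() {
		fmt.Printf("%-9s starts at byte %d\n", names[i], off)
	}
	fmt.Printf("alignment of ID: %d, total size: %d\n", unsafe.Alignof(h.ID), unsafe.Sizeof(h))
}
```

On a 64-bit machine the offsets should come out as 0, 8, 16, 20 and 24: the gap between Detached (offset 0, 1 byte) and ID (offset 8) is the 7 bytes of padding, and the 4 bytes after Surface bring the total to 32.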

Now that we understand the mechanics better, we can rearrange the struct to optimise memory utilisation.

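```go
type HouseOptimised struct {
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
	Detached  bool    // 1 byte
}
```

The fields are now ordered from the largest to the smallest, so each one already sits on a suitable boundary and the compiler needs to insert far less padding.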

```go
package main

import (
	"fmt"
	"unsafe"
)

type HouseOptimised struct {
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
	Detached  bool    // 1 byte
}

type House struct {
	Detached  bool    // 1 byte
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
}

func main() {
	var h House
	var ho HouseOptimised
	// Both structs hold the same fields, so the naive sums are identical.
	expectedHouseSize := 1 + 8 + 4 + 4 + 4
	expectedHouseOptimisedSize := 8 + 4 + 4 + 4 + 1
	fmt.Printf("Expected size of House %d, got %d\n", expectedHouseSize, unsafe.Sizeof(h))
	fmt.Printf("Expected size of HouseOptimised %d, got %d\n", expectedHouseOptimisedSize, unsafe.Sizeof(ho))
}
```

This reduces the memory burden: the actual size is 24 bytes (3 × 8 bytes) instead of the earlier 32.

```
Expected size of House 21, got 32
Expected size of HouseOptimised 21, got 24
```
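The wasted bytes can also be computed rather than counted by hand. The sketch below uses the `reflect` package to subtract the sum of the field sizes from the total struct size. `paddingBytes` is a hypothetical helper, and it assumes a flat struct whose fields are not structs themselves, running on a 64-bit machine.

```go
package main

import (
	"fmt"
	"reflect"
)

type House struct {
	Detached  bool
	ID        int64
	Perimeter int32
	Height    int32
	Surface   float32
}

type HouseOptimised struct {
	ID        int64
	Perimeter int32
	Height    int32
	Surface   float32
	Detached  bool
}

// paddingBytes returns the total struct size minus the sum of its field sizes.
// Assumption: v is a struct value with non-struct fields; it panics otherwise.
func paddingBytes(v any) uintptr {
	t := reflect.TypeOf(v)
	var fields uintptr
	for i := 0; i < t.NumField(); i++ {
		fields += t.Field(i).Type.Size()
	}
	return t.Size() - fields
}

func main() {
	fmt.Println("House padding:", paddingBytes(House{}))
	fmt.Println("HouseOptimised padding:", paddingBytes(HouseOptimised{}))
}
```

With the sizes measured above, this amounts to 32 − 21 = 11 bytes of padding for House and 24 − 21 = 3 bytes for HouseOptimised.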

Benchmark

We have seen that the memory footprint has decreased. Let’s see how this is reflected in a benchmark.

```go
package main

import "testing"

type HasPerimeter interface {
	GetPerimeter() int32
}

// House keeps the original, arbitrary field order (32 bytes).
type House struct {
	Detached  bool    // 1 byte
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
}

func (h House) GetPerimeter() int32 {
	return h.Perimeter
}

// EnhancedHouse holds the same fields, ordered to minimise padding (24 bytes).
type EnhancedHouse struct {
	ID        int64   // 8 bytes
	Perimeter int32   // 4 bytes
	Height    int32   // 4 bytes
	Surface   float32 // 4 bytes
	Detached  bool    // 1 byte
}

func (eh EnhancedHouse) GetPerimeter() int32 {
	return eh.Perimeter
}

const sampleSize = 1000

var Houses = make([]House, sampleSize)

var EnhancedHouses = make([]EnhancedHouse, sampleSize)

// init fills both slices with identical data.
func init() {
	for i := 0; i < sampleSize; i++ {
		Houses[i] = House{
			Detached:  false,
			ID:        int64(i),
			Perimeter: int32(i),
			Height:    int32(i),
			Surface:   0,
		}
		EnhancedHouses[i] = EnhancedHouse{
			ID:        int64(i),
			Perimeter: int32(i),
			Height:    int32(i),
			Surface:   0,
			Detached:  false,
		}
	}
}

// traverse walks the slice and sums the perimeters, touching every element once.
func traverse[T HasPerimeter](arr []T) int32 {
	var arbitraryNum int32
	for _, item := range arr {
		arbitraryNum += item.GetPerimeter()
	}
	return arbitraryNum
}

func BenchmarkTraverseHouses(b *testing.B) {
	for n := 0; n < b.N; n++ {
		traverse(Houses)
	}
}

func BenchmarkTraverseEnhancedHouses(b *testing.B) {
	for n := 0; n < b.N; n++ {
		traverse(EnhancedHouses)
	}
}
```

The benchmark shows that deliberate memory optimisation brings measurable results: on this machine, traversing the reordered structs is roughly 12% faster.

```
goos: darwin
goarch: arm64
cpu: Apple M3 Pro
BenchmarkTraverseHouses
BenchmarkTraverseHouses-11            702674    1661 ns/op
BenchmarkTraverseEnhancedHouses
BenchmarkTraverseEnhancedHouses-11    831040    1463 ns/op
PASS
```
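One plausible contributor to the difference is simply that the smaller struct makes the whole slice smaller, so less memory has to be read during the traversal. The sketch below estimates the footprint of 1000 elements for both layouts. `footprint` is a hypothetical helper, and the 64-byte cache line is an assumption for illustration; real hardware may use a different size.

```go
package main

import (
	"fmt"
	"unsafe"
)

type House struct {
	Detached  bool
	ID        int64
	Perimeter int32
	Height    int32
	Surface   float32
}

type EnhancedHouse struct {
	ID        int64
	Perimeter int32
	Height    int32
	Surface   float32
	Detached  bool
}

// cacheLineBytes is an assumed cache line size, not a measured one.
const cacheLineBytes = 64

// footprint returns the bytes occupied by n elements of the given size
// and the number of cache lines needed to hold them, rounded up.
func footprint(n int, elemSize uintptr) (bytes, lines int) {
	bytes = n * int(elemSize)
	lines = (bytes + cacheLineBytes - 1) / cacheLineBytes
	return bytes, lines
}

func main() {
	const sampleSize = 1000
	hb, hl := footprint(sampleSize, unsafe.Sizeof(House{}))
	eb, el := footprint(sampleSize, unsafe.Sizeof(EnhancedHouse{}))
	fmt.Printf("House:         %d bytes, %d cache lines\n", hb, hl)
	fmt.Printf("EnhancedHouse: %d bytes, %d cache lines\n", eb, el)
}
```

Under these assumptions the slice shrinks from 32000 to 24000 bytes, a quarter less data for the same number of elements.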

Summary

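Memory alignment in Go improves performance by ensuring that data is stored in memory in a way that matches the CPU’s optimal access patterns, but it comes at the cost of padding. Ordering struct fields deliberately, for example from the largest to the smallest, keeps that padding to a minimum. It reduces the memory footprint and the amount of data the CPU has to read and write, minimising overhead and maximising efficiency.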